How Plaquette enables quantum hardware manufacturers to simulate, analyse & optimise quantum computing architectures.

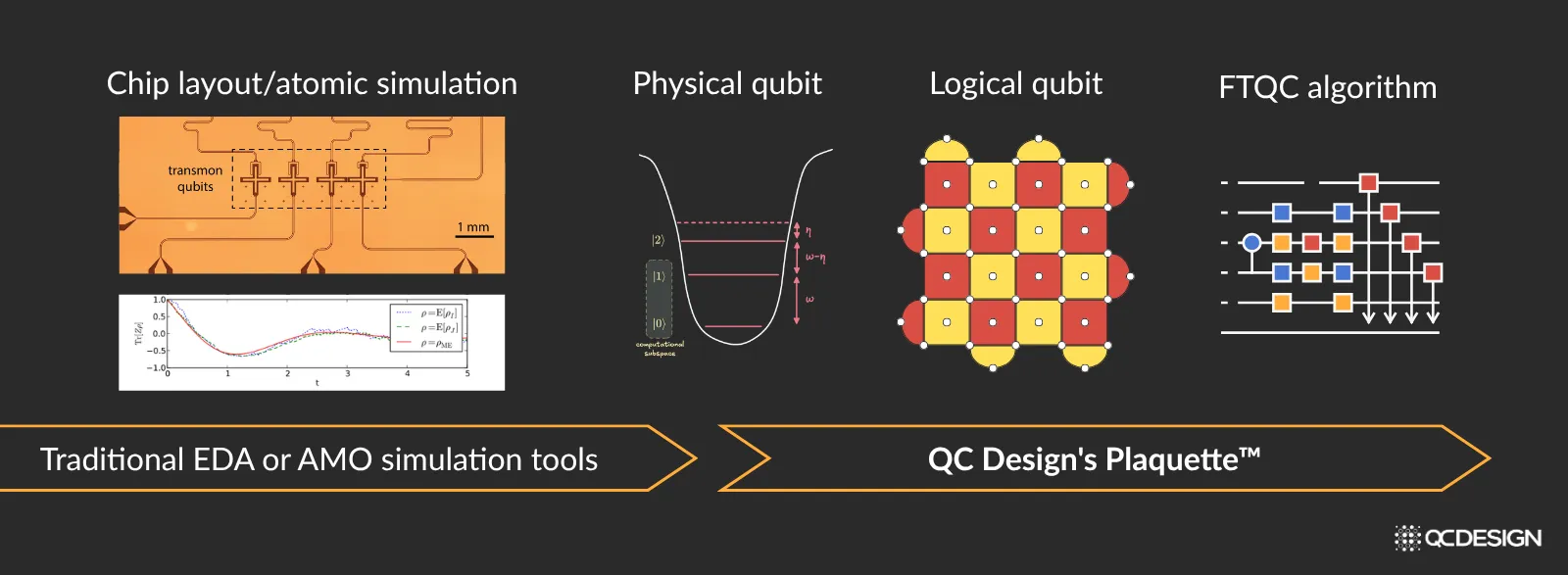

Plaquette is a software tool that quantum hardware manufacturers use to design fault-tolerant quantum computers. This design workflow has several steps, and Plaquette picks up where the tools used to design physical qubits leave off.

For companies working on superconducting qubits, photonics, or spin qubits, the early stage of the design workflow might include traditional EDA tools for designing chips (some examples are tools from Cadence, Synopsys and Ansys). For companies working on neutral atoms and trapped ions, it is more likely to involve atomic simulations, usually performed in-house. These tools provide hardware manufacturers with designs for physical qubits and information about the errors that act on these physical qubits when quantum gates are applied to them.

In the next stage of the design workflow, hardware manufacturers need to arrange vast numbers of physical qubits to form logical qubits. This is where Plaquette steps in, helping design the best possible logical qubits by taking into account many design considerations, including hardware-specific errors.

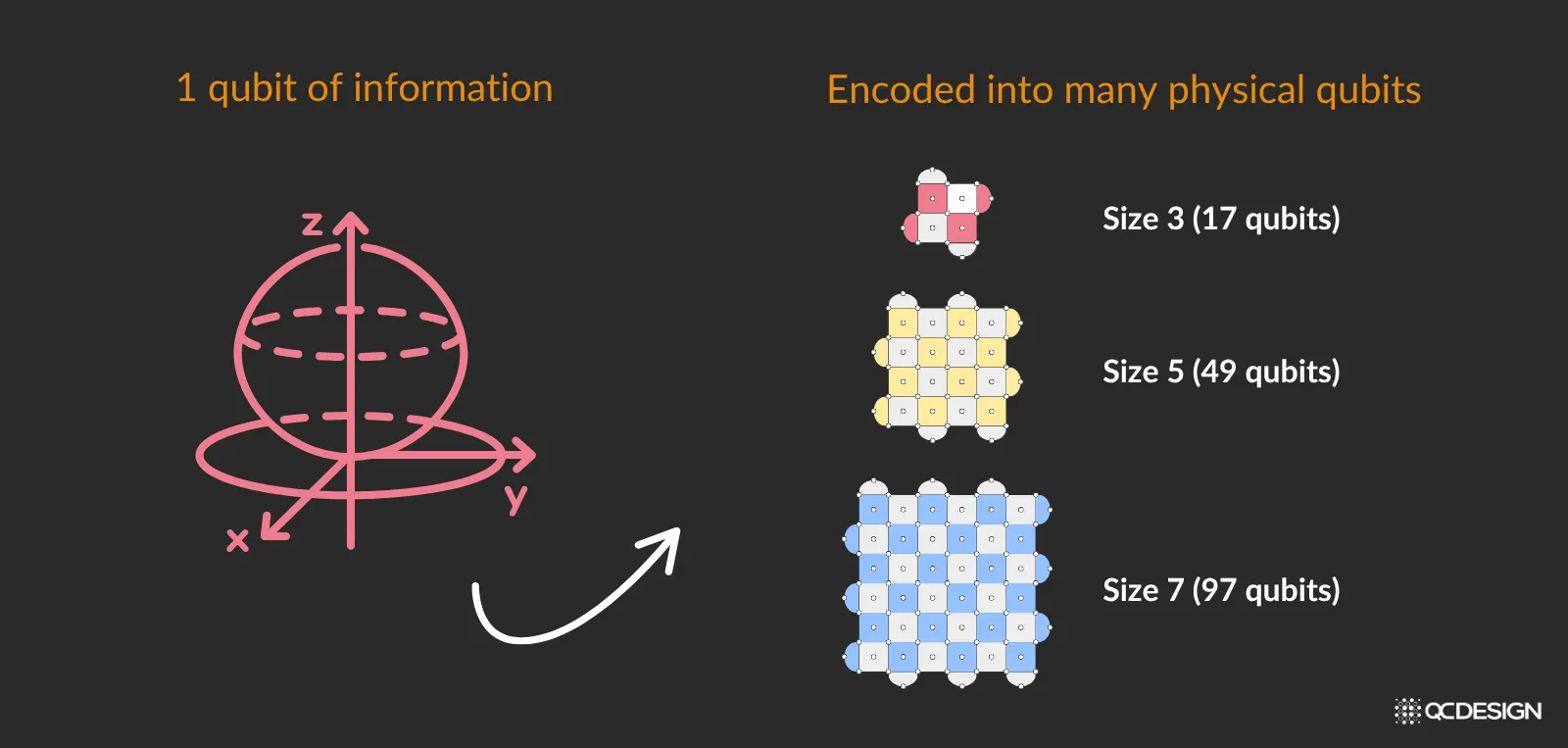

To understand what makes this stage so challenging, we need to look at how information is encoded and protected in a fault-tolerant system. The key concept here is the logical qubit, the fundamental building block that allows quantum computers to suppress errors and scale.

What’s a logical qubit?

A logical qubit takes one qubit of information and encodes it into many physical qubits. Consider the example of a surface code. The “size” of the encoding of a surface code qubit can be quantified by a number called the “distance”. In the example below, we can see surface codes of distance 3, 5 and 7. One qubit of information is encoded into logical qubits comprising 17, 49 or 97 physical qubits, respectively.
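The qubit counts quoted above match the standard rotated surface code layout, where a distance-d patch uses d² data qubits plus d² − 1 measurement qubits. The short sketch below assumes that layout (the function name is ours, for illustration only, and is not part of Plaquette).

```python
def rotated_surface_code_qubits(distance: int) -> dict:
    """Qubit counts for a rotated surface code patch (assumed layout)."""
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    data = distance ** 2         # d x d grid of data qubits
    ancilla = distance ** 2 - 1  # one measurement qubit per stabilizer check
    return {"data": data, "ancilla": ancilla, "total": data + ancilla}


for d in (3, 5, 7):
    print(d, rotated_surface_code_qubits(d))
```

The total grows quadratically with distance, which is why larger logical qubits quickly become expensive in hardware.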

Logical qubits are the key to fault tolerance because they suppress imperfections. But logical qubits still have errors, and understanding the nature of these logical errors is critical.

Logical errors

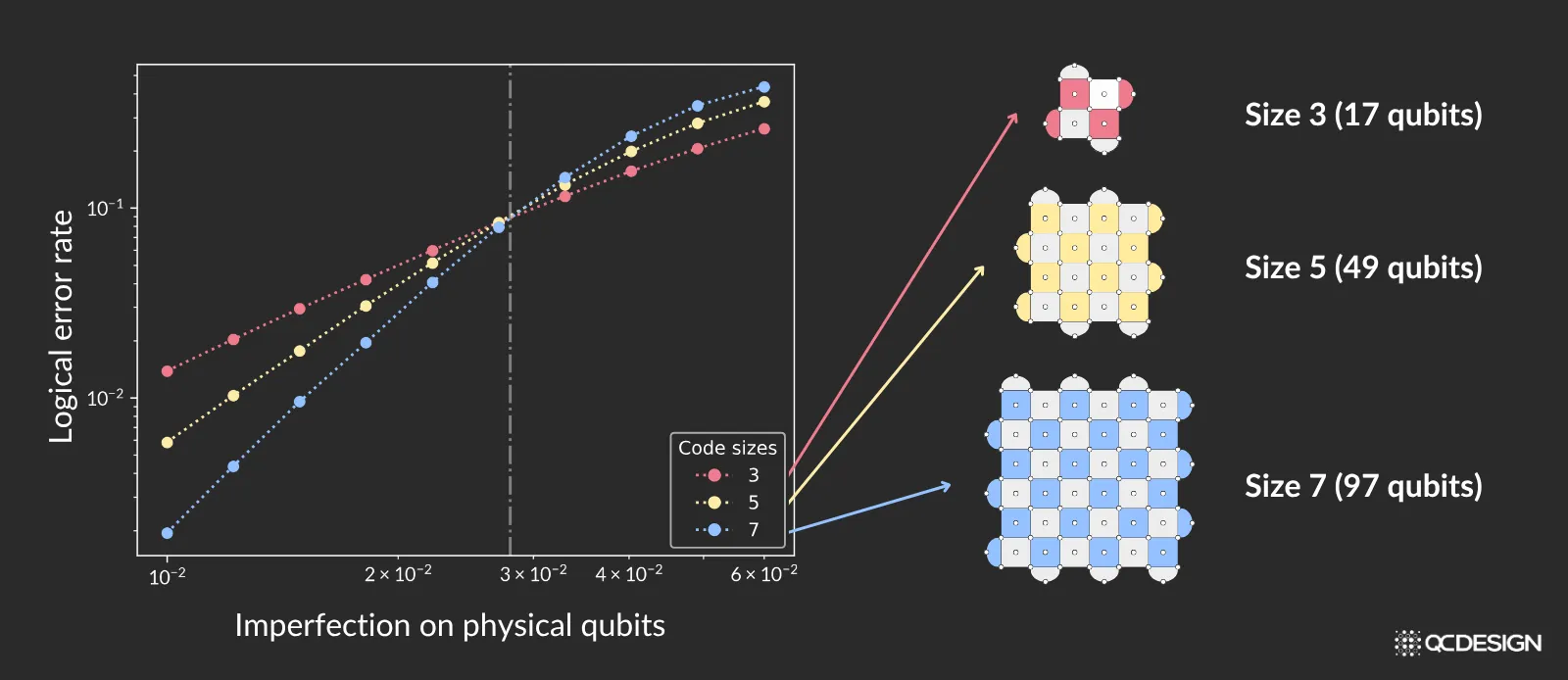

Logical qubit errors depend on the size (i.e. distance) of the logical qubit and the imperfections on the physical qubits within it. The way in which these are related can be seen in the figure below.

On the horizontal axis, we see the size of the imperfections on the physical qubits. On the vertical axis, we see the resulting size of the logical errors (notice that this is a log-log plot). Different curves represent different logical qubit sizes. We’ll discuss the details in a moment.

This plot is the so-called threshold plot. Threshold plots are so important in fault-tolerant hardware design that hardware manufacturers find themselves simulating threshold plots day in and day out.

So let’s dive a bit deeper into this plot.

Threshold plots

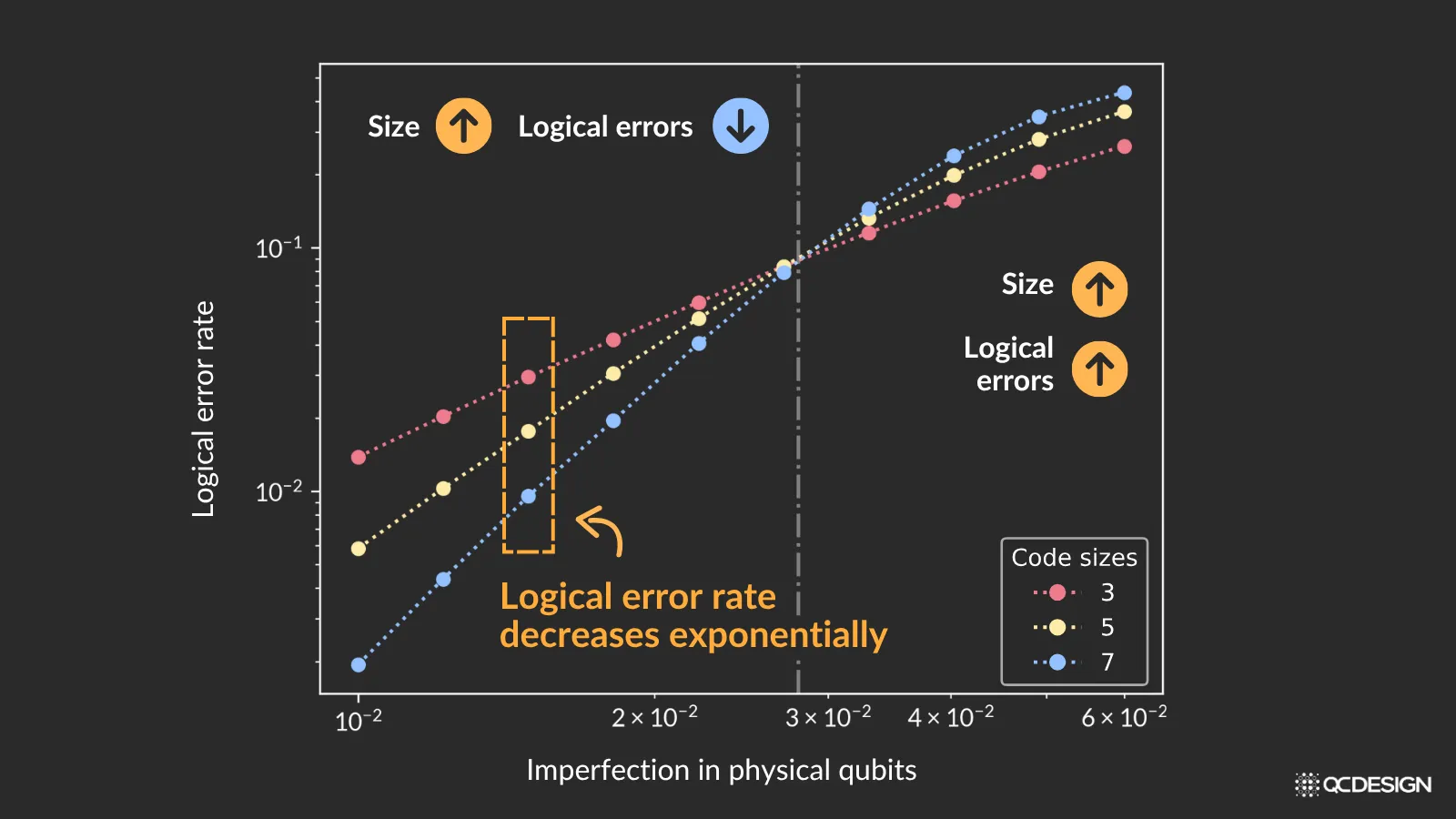

On the threshold plot, there’s a special point, which we’ve denoted with a dashed vertical line.

Let’s start by looking at the plot to the right of this vertical line. This is where the qubits are quite noisy. Notice that the blue curve (code size = 7) is above the yellow curve (code size = 5), which is above the red curve (code size = 3). This tells us that if you make the logical qubit larger (increase the code size), by adding more physical qubits, the error in the logical qubit actually goes up. That’s not what we want to see in our system.

So what about to the left of the dashed vertical line? Something very interesting happens here: the behavior inverts. Now the red curve (code size = 3) is on the top, and the blue curve (code size = 7) is on the bottom. This tells us that as you increase the size of the logical qubit, the error on the logical qubits goes down. This is great.

What’s more, for a fixed physical error rate, the logical error rate decreases exponentially as the code size increases. This is not just great, it’s excellent!
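The same inversion can be reproduced with a toy model. The sketch below is not a surface code simulation and not how Plaquette computes thresholds. It assumes a simple repetition code with independent bit flips and majority-vote decoding, for which the logical error rate can be written down exactly. That toy code has its crossing point at a physical error rate of 50%, far higher than realistic codes, but the qualitative behaviour on either side is the same.

```python
from math import comb


def logical_error_rate(distance: int, p: float) -> float:
    """Exact failure probability of majority-vote decoding for a
    distance-d repetition code under independent bit flips of rate p."""
    majority = distance // 2 + 1  # flips needed to fool the majority vote
    return sum(
        comb(distance, k) * p**k * (1 - p) ** (distance - k)
        for k in range(majority, distance + 1)
    )


for p in (0.1, 0.4, 0.6):
    rates = [logical_error_rate(d, p) for d in (3, 5, 7)]
    side = "below" if p < 0.5 else "above"
    print(f"p={p} ({side} threshold):", [f"{r:.4f}" for r in rates])
```

Below the crossing point the rates shrink as the distance goes from 3 to 7. Above it they grow.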

Why being below threshold is such a big deal

Say someone wants to implement an algorithm with millions of gates acting on their logical qubits. The hardware will need very low logical error rates, perhaps one part in a million or smaller. As long as you’re on the left side of the threshold, it’s possible to make the logical error as low as you need merely by throwing more physical qubits at the problem. This is great news for hardware manufacturers, because the other option, decreasing imperfections in the physical qubits themselves, is a much harder engineering challenge. Sometimes the laws of physics don’t even allow it.
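As a rough illustration of "throwing more physical qubits at the problem", the sketch below uses a widely quoted heuristic for below-threshold scaling, p_L ≈ A · (p / p_th)^((d + 1) / 2), and searches for the smallest odd distance that meets a target logical error rate. The prefactor A = 0.1 and the threshold p_th = 1% are illustrative assumptions, not Plaquette outputs. Real values depend on the code, decoder and noise model.

```python
def heuristic_logical_error(p, distance, p_th=0.01, prefactor=0.1):
    """Heuristic below-threshold scaling (assumed form, illustrative constants)."""
    return prefactor * (p / p_th) ** ((distance + 1) / 2)


def min_distance(p, target, p_th=0.01, prefactor=0.1, max_distance=101):
    """Smallest odd distance whose heuristic logical error rate meets the target."""
    if p >= p_th:
        raise ValueError("above threshold: adding qubits will not help")
    for d in range(3, max_distance + 1, 2):
        if heuristic_logical_error(p, d, p_th, prefactor) <= target:
            return d
    raise ValueError("target not reached within max_distance")


d = min_distance(p=0.002, target=1e-6)
# Physical qubit count assumes a rotated surface code patch: 2 * d**2 - 1.
print("distance:", d, "physical qubits per logical qubit:", 2 * d**2 - 1)
```

Under this heuristic the search fails outright at or above threshold, which is the point of the section: no amount of extra qubits rescues an architecture on the wrong side of the line.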

This is why quantum hardware manufacturers are very interested in tracking the different imperfections in their systems, as well as how well their architectures tolerate each of them.

But there’s a challenge, which is that different architectures have different imperfections, and different imperfections have different thresholds.

What’s an architecture?

In our neck of the woods, an architecture is defined by four things: (1) how the qubits are connected together using an error correction code, (2) how errors are found and corrected using an error decoding algorithm, (3) how qubits are defined from different energy levels, or potentially from different locations or timings of photons, on the hardware, and (4) how qubits are controlled using different pulse sequences or, for photons, interactive waveguides.

Error correction and decoding

Plaquette comes with a vast library of almost every error correction code and decoder that quantum hardware manufacturers have studied. This includes the standard codes that have been studied for over a decade, such as surface codes, color codes and Bacon-Shor codes, as well as more modern qLDPC codes like Hypergraph Product, Generalized Bicycle, Bivariate Bicycle and Lifted Product codes.

Not only can Plaquette simulate these for matter-based platforms, it can also automatically ‘foliate’ these codes into their measurement- or fusion-based versions that are relevant for photonic quantum computing. In terms of decoding, Plaquette comes with state-of-the-art decoders such as PyMatching, FusionBlossom, Union-Find, and several Belief-Propagation-based decoders that are crucial for qLDPC codes.
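To give a feel for what a decoder does, here is a minimal sketch using the simplest possible case: a 3-bit repetition code with a lookup-table decoder. This is a toy illustration only. It is not Plaquette's API and not one of the decoders listed above, which handle far larger codes where a lookup table would be impractical.

```python
from itertools import product


def syndrome(error):
    """Parities of neighbouring bits: the two checks of the 3-bit repetition code."""
    return (error[0] ^ error[1], error[1] ^ error[2])


# Lookup table: syndrome -> lowest-weight error consistent with it.
CORRECTION = {
    (0, 0): (0, 0, 0),
    (1, 0): (1, 0, 0),
    (1, 1): (0, 1, 0),
    (0, 1): (0, 0, 1),
}


def decode(error):
    """Return the residual error after applying the looked-up correction."""
    fix = CORRECTION[syndrome(error)]
    return tuple(e ^ f for e, f in zip(error, fix))


for error in product((0, 1), repeat=3):
    residual = decode(error)
    status = "corrected" if residual == (0, 0, 0) else "logical error"
    print(error, "->", status)
```

The decoder only ever sees the syndrome, never the error itself. Any single flip is corrected, while two or more flips are mistaken for the complementary pattern and leave a logical error behind.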

Why architecture choice matters

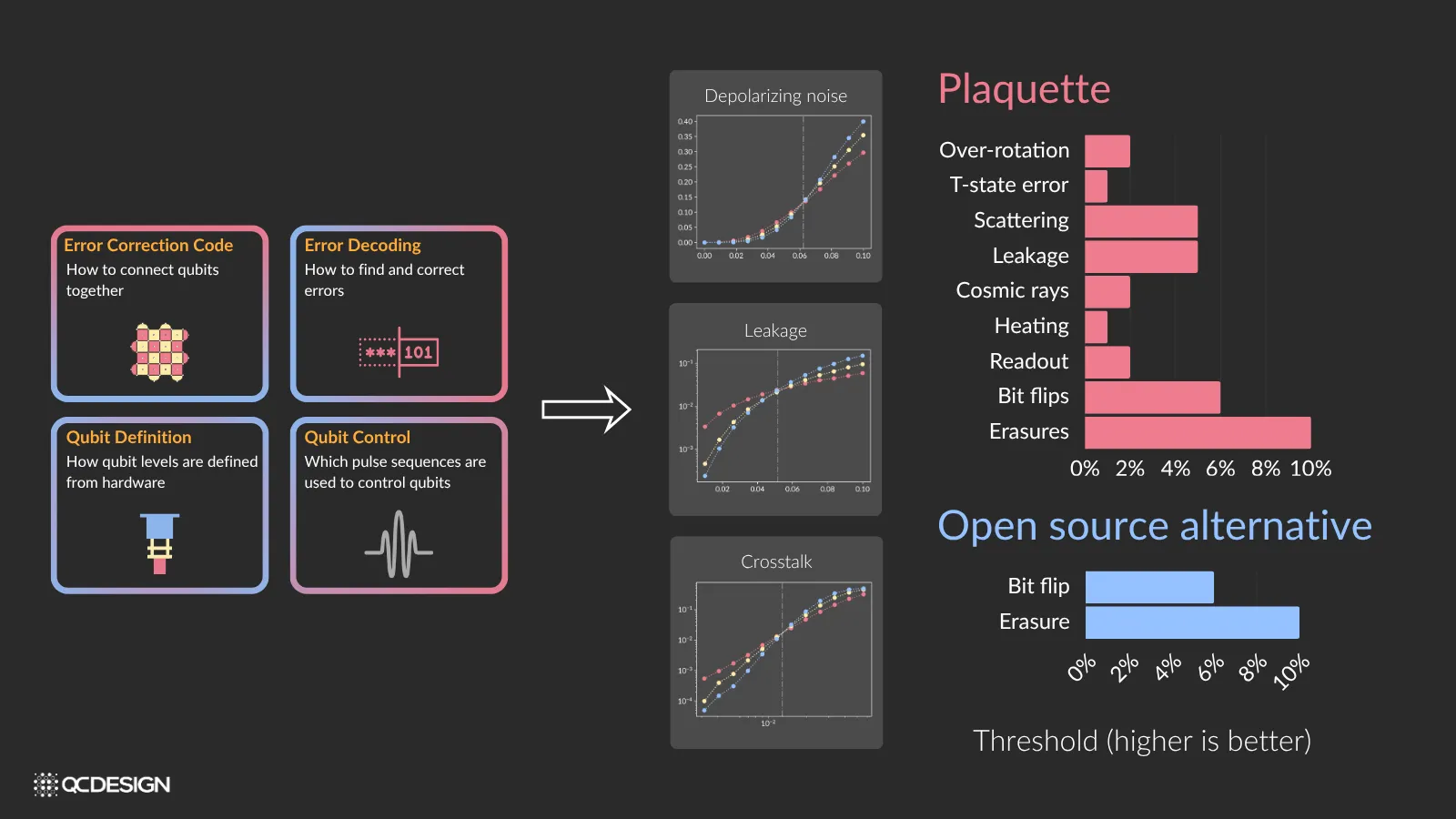

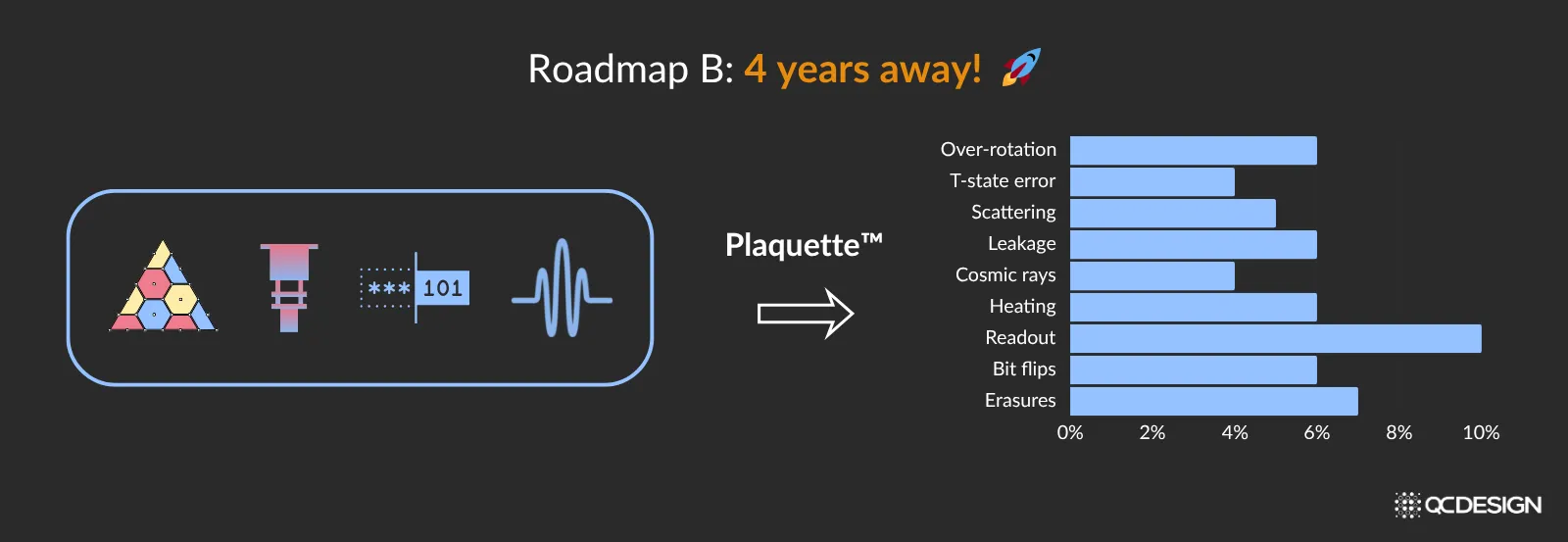

Different ways of building quantum hardware can have different imperfections, where each imperfection has its own threshold. And it’s essential to know them all.

Plaquette helps hardware manufacturers find these thresholds with respect to any imperfection in their hardware. Open-source options exist, but they only provide thresholds with respect to a couple of imperfections, whereas Plaquette can provide thresholds with respect to any of the 20 or more imperfections that hardware manufacturers have in their systems.

It can do this thanks to five different simulators.

Five different simulators

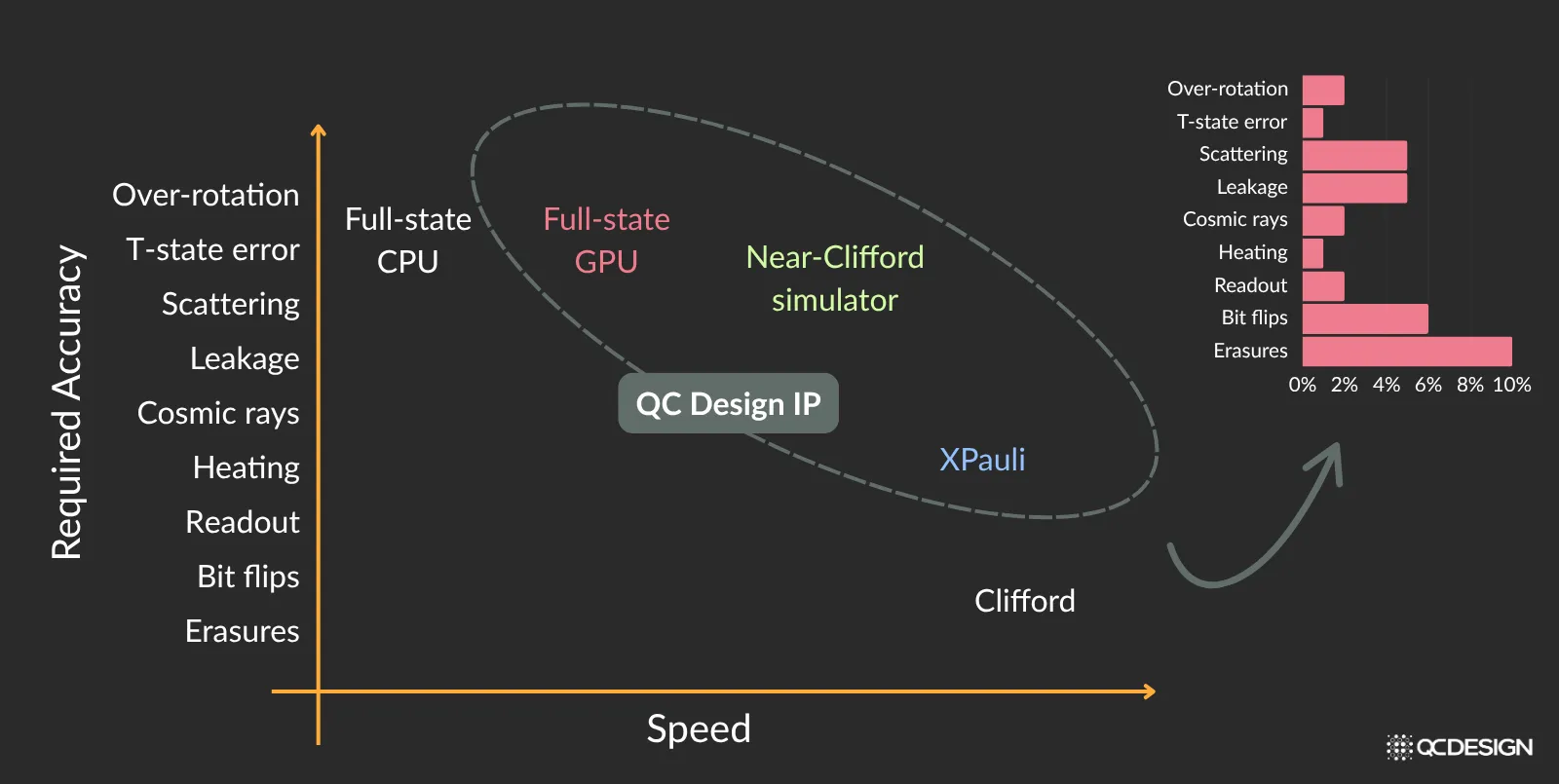

Plaquette has access to five simulators, which have different speeds and accuracies, so hardware manufacturers can choose the right simulator for their needs.

Using Plaquette, you could choose Clifford simulators, which are very fast. Unfortunately, Clifford simulators are limited to only two imperfections, and hardware manufacturers often need to look at many other imperfections. For this, they can use other simulators built directly into Plaquette.

Our extended Pauli (XPauli) simulator can capture important imperfections like leakage and cosmic ray events in superconducting qubits, scattering in neutral atoms, heating in trapped ions, and much more. Our Near-Clifford simulator can deal with any imperfection that is close to the so-called Clifford group. This includes two important classes of imperfections, over- and under-rotation, which arise, for example, if the pulse acting on a qubit is too long or too short. It can also handle T-states, which are very important for universal quantum computing.
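To make over- and under-rotation concrete, consider a single-qubit X gate implemented as a rotation by π. If the pulse is slightly too long or too short, the actual angle is π(1 + ε), and the qubit no longer flips perfectly. The sketch below computes the resulting failure probability from the textbook single-qubit rotation formula. It is a standalone illustration, not Plaquette code, and it reduces a coherent error to a single probability, which is itself a simplification.

```python
import math


def over_rotation_flip_error(epsilon: float) -> float:
    """Probability that an intended X gate (rotation by pi) fails to take
    |0> to |1> when the actual rotation angle is pi * (1 + epsilon)."""
    theta = math.pi * (1 + epsilon)
    p_one = math.sin(theta / 2) ** 2  # population in |1> after rotating |0>
    return 1 - p_one


for eps in (-0.05, -0.01, 0.0, 0.01, 0.05):
    print(f"epsilon={eps:+.2f}  failure probability={over_rotation_flip_error(eps):.6f}")
```

For small ε the failure probability grows roughly as (πε/2)², and it is the same for over- and under-rotation of equal size.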

In partnership with Nvidia, we also built a full-state GPU simulator that’s optimized for fault-tolerant circuits, which can go far beyond traditional full-state CPU simulators (which are also available within Plaquette).

With this set of simulators, you can model:

- many, many more physical qubits than previously possible

- for many different levels of required accuracy

- for many different imperfections on different hardware manufacturers’ systems

What’s the implication of all this? Hardware manufacturers can use Plaquette to find thresholds with respect to all the different imperfections within their architecture. Let’s look at an example.

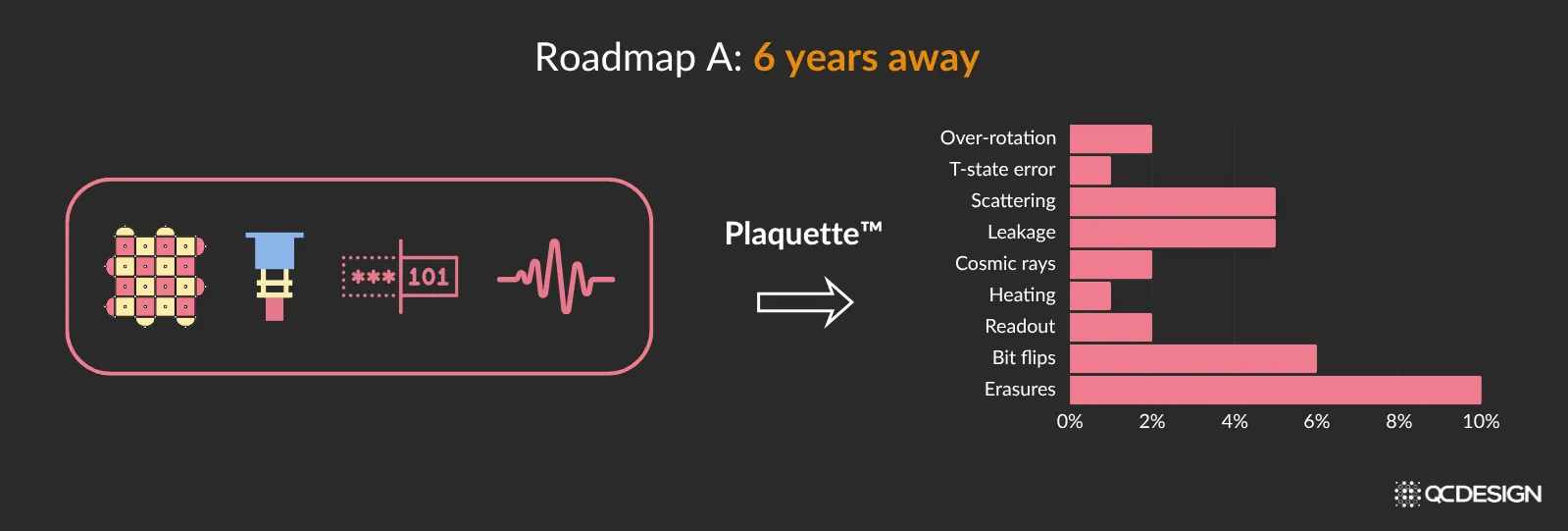

Finding the best path forward

Say a hardware manufacturer has a particular architecture in mind: a particular error correction code, a particular decoder, a particular hardware setup, and a particular approach for qubit control.

Given their knowledge of the imperfections in this system, they use Plaquette to compute the thresholds for various imperfections in this architecture and find that the threshold with respect to—in this case—heating is too low.

They know that it would take a long time for the hardware to get below this threshold, meaning that it would take a long time to build a fault-tolerant quantum computer with this architecture (in this example, 6 years).

Armed with this information, the hardware manufacturer can go back and design new architectures, using Plaquette as a guide. They iterate quickly, and find architectures that have better tolerances across the board for the imperfections that arise in their systems. These architectures can then be built much sooner (in this example, only 4 years!).

The result is that hardware manufacturers using Plaquette can get to fault tolerance years ahead of their competition.

The big picture

In quantum hardware development, small design decisions can have multi-year consequences. Plaquette gives teams a way to explore those decisions systematically, to test architectures, map their tolerances, and find the best path forward before committing to fabrication. For hardware manufacturers, that can mean reaching fault tolerance not just sooner, but with far less risk.

If you’re building or scaling quantum hardware, Plaquette can show you exactly where your architecture stands, and how to reach fault tolerance faster. Book a demo to see how Plaquette fits into your design workflow.